Julia Navarro PhD defense.

On Monday, 2 December 2019, Julia Navarro will defend her PhD thesis, supervised by Dr. Antoni Buades Capó. The defense will take place in the degree room of the Antoni Maria Alcover i Sureda building, UIB.

Title: Multi-view imaging: depth estimation and enhancement.

Author: Julia Navarro

Director: Dr. Antoni Buades

Department: Matemàtiques i informàtica

PhD program: Information and Communication Technologies

Date: 2 December 2019

Abstract:

Multi-view imaging is the process of using multiple cameras to capture several pictures of the same scene. In this thesis, we study the problems of depth estimation and spatio-angular super-resolution given multiple images of the scene.

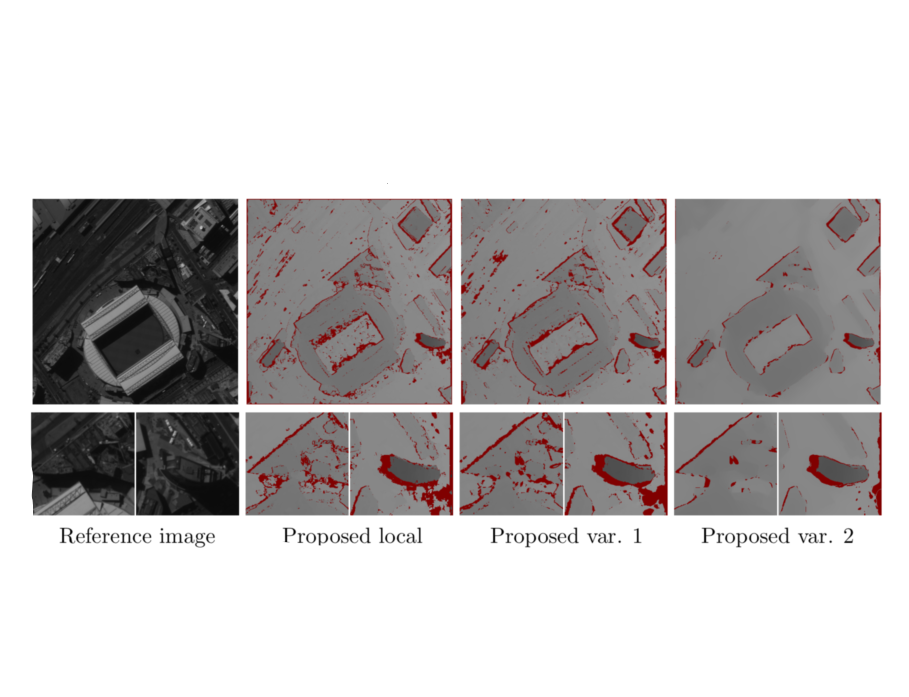

We first focus on the two-view case and develop a novel approach to depth estimation that combines local and global strategies. In the local stage, we adopt an adaptive support weights approach in which the weight distribution favours pixels in the block that share the same displacement as the reference pixel. Whereas state-of-the-art algorithms make these weights depend only on the image configuration around the reference pixel, we propose a weight function that additionally depends on the tested disparity. Since the disparity function is unknown, we give more weight to the pixels in the block that match with a smaller cost, as these are assumed to have the tested displacement. A multi-scale strategy and validation criteria are used to keep only reliable matches and provide a robust estimation. Then, we propose a global filtering and interpolation stage. We present two different variational methods for this purpose: an approach based on optical flow formulations and a model that combines total variation and non-local regularization. These two variational methods increase the precision of the local estimation.
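To make the idea of disparity-dependent support weights concrete, here is a minimal sketch on a single rectified scanline. It is not the thesis implementation: the function names, the exponential weight form, and the parameters `gamma_color` and `gamma_cost` are assumptions chosen for illustration. Each neighbour in the block is weighted both by its similarity to the reference pixel and by its own matching cost at the tested disparity.

```python
import math


def aggregated_cost(left, right, x, d, radius=2, gamma_color=10.0, gamma_cost=5.0):
    """Weighted matching cost for pixel x of `left` at tested disparity d.

    left, right: lists of intensities (one rectified scanline each).
    Assumed convention: left[q] corresponds to right[q - d].
    """
    num = 0.0
    den = 0.0
    for q in range(x - radius, x + radius + 1):
        # Skip neighbours whose match would fall outside either scanline.
        if q < 0 or q >= len(left) or q - d < 0 or q - d >= len(right):
            continue
        cost_q = abs(left[q] - right[q - d])
        # Classic term: neighbours that look like the reference pixel count more.
        w_color = math.exp(-abs(left[q] - left[x]) / gamma_color)
        # Disparity-dependent term: neighbours that match well at d count more.
        w_cost = math.exp(-cost_q / gamma_cost)
        w = w_color * w_cost
        num += w * cost_q
        den += w
    return num / den if den > 0 else float("inf")


def best_disparity(left, right, x, max_disp, **kwargs):
    """Winner-takes-all choice over the disparities 0..max_disp."""
    costs = [aggregated_cost(left, right, x, d, **kwargs) for d in range(max_disp + 1)]
    return min(range(max_disp + 1), key=costs.__getitem__)


# Toy scanlines: `left` is `right` shifted by two pixels.
right = [10, 20, 80, 40, 90, 30, 60, 15, 70, 25]
left = [0, 0] + right[:-2]
estimated = best_disparity(left, right, x=5, max_disp=4)
```

In this toy case the block around `x=5` matches perfectly at a shift of two pixels, so the weighted cost at that disparity is zero. A real pipeline would add the multi-scale strategy and validation criteria mentioned above, which this sketch omits.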

The two-view stereo method is then extended to depth recovery from a light field image. A light field image can be considered as a collection of 2D images acquired from different viewpoints arranged on a regular grid. We exploit this configuration and compute two-view disparity maps between specific pairs of views, using the proposed two-view stereo approach. These two-view disparities are robustly combined to obtain a single, accurate estimate.
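The abstract does not say how the two-view disparities are combined, so the following is only one plausible sketch of a robust fusion: a per-pixel median over the maps that passed validation. The function name `fuse_disparities`, the use of `None` for rejected matches, and the `min_valid` threshold are assumptions, and the maps are assumed to be already normalized to a common baseline.

```python
from statistics import median


def fuse_disparities(maps, min_valid=2):
    """Fuse several disparity maps (lists of rows) into a single map.

    Entries equal to None mark matches rejected as unreliable.
    A pixel is kept only if at least `min_valid` maps provide a value.
    """
    height, width = len(maps[0]), len(maps[0][0])
    fused = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            values = [m[y][x] for m in maps if m[y][x] is not None]
            if len(values) >= min_valid:
                # The median is insensitive to a minority of outliers.
                fused[y][x] = median(values)
    return fused


# Three 1x3 maps: one outlier in column 0, one rejected match in column 1,
# and a single surviving estimate in column 2.
maps = [
    [[1.0, 2.0, None]],
    [[1.2, None, None]],
    [[9.0, 2.2, 3.0]],
]
fused = fuse_disparities(maps)
```

Here the outlier `9.0` does not affect the first pixel, and the last pixel stays empty because only one map supports it.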

Then, we study the super-resolution problem for the multi-view setting in both the spatial and angular dimensions. The spatial super-resolution approach is applied to videos, light fields and depth videos; in the last case, we assume the availability of the corresponding high-resolution optical frames. The proposed method comprises inter-frame registration, upsampling and deconvolution. The upsampling strategy combines patches from several frames that do not necessarily belong to the same pixel trajectory. The selection of these patches is robust to flow inaccuracies, noise and aliasing. For deconvolution, we propose a variational model that combines total variation with non-local regularization.
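As a rough illustration only, a deconvolution energy that combines total variation with a non-local term is often written in the generic form below. All symbols are assumptions made for this sketch: $f$ is the upsampled frame, $K$ the blur operator, $w(x,y)$ patch-similarity weights, and $\lambda$, $\mu$ trade-off parameters. The exact model used in the thesis may differ.

```latex
\min_{u}\; \frac{1}{2}\,\|Ku - f\|_2^2
\;+\; \lambda \sum_{x} |\nabla u(x)|
\;+\; \mu \sum_{x}\sum_{y} w(x,y)\,\bigl(u(x) - u(y)\bigr)^2
```

The first term keeps the blurred solution close to the data, the second favours piecewise-smooth images with sharp edges, and the third encourages pixels with similar surrounding patches to take similar values.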

Finally, we present a solution to angular super-resolution for light field images. Specifically, we propose a learning-based approach that synthesizes the center sub-aperture image from the four corner ones. We use three sequential convolutional neural networks for feature extraction, scene geometry and view selection. In contrast to state-of-the-art approaches, we treat occlusions explicitly by letting the network estimate a different disparity map per view. Together with the view selection network, this strategy proves to be the most important factor in obtaining proper reconstructions near object boundaries. The method, which is initially tailored to and tested on plenoptic light fields, is also adapted to and tested on wide-baseline light fields.